Machine learning and artificial intelligence have had a massive impact on our world, and their influence will continue to grow. Deep learning, a subfield of machine learning, is a big part of this change, which includes making robots and devices like smartphones and TVs smarter. Deep learning helps computers learn new things using artificial neurons, loosely modeled on the way we learn. It helps with tasks such as making your smartphone understand your voice, helping self-driving cars tell the difference between people and objects like lampposts and stop signs, and letting assistants control your smart gadgets. With the help of deep learning, we can train computers to understand and recognize pictures, text, and voice. On some tasks, these programs can even do a better job than humans. Now that we know what deep learning is, we can explore the top 10 evergreen deep learning algorithms.

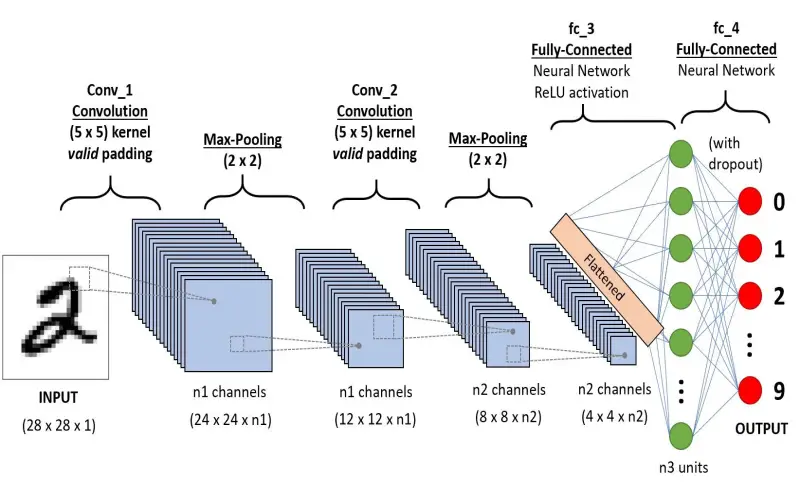

1. Convolutional Neural Networks (CNNs)

Convolutional Neural Networks (CNNs) consist of several layers that process data and pass it along. These networks are great at finding specific things in pictures. The essential parts are the Convolutional Layer, which slides small filters over the input to detect patterns; the Rectified Linear Unit (ReLU), which sets negative values to zero and leaves positive values unchanged; and the Pooling Layer, which shrinks the data by keeping only a summary of each small region. After passing through these layers, the data is flattened into a long list of numbers. If we’re looking at pictures, for example, a later part of the network can use this list of numbers to work out what’s in the images.
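
To make the layer sequence concrete, here is a minimal sketch in plain Python of one pass through a convolution, ReLU, pooling, and flattening step. The tiny 5x5 image, the hand-picked edge filter, and all function names are assumptions chosen for illustration; a real CNN learns its filters during training and uses a library rather than nested lists.

```python
def conv2d(image, kernel):
    """Slide the kernel over the image (no padding, stride 1)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b]
                for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def relu(feature_map):
    """Replace negative values with zero, keep positive values."""
    return [[max(0, v) for v in row] for row in feature_map]


def max_pool(feature_map, size=2):
    """Keep the largest value in each non-overlapping size x size block."""
    return [
        [
            max(feature_map[i + a][j + b]
                for a in range(size) for b in range(size))
            for j in range(0, len(feature_map[0]) - size + 1, size)
        ]
        for i in range(0, len(feature_map) - size + 1, size)
    ]


def flatten(feature_map):
    """Turn the 2-D feature map into one long list of numbers."""
    return [v for row in feature_map for v in row]


# Hypothetical input: dark on the left (0), bright on the right (1).
image = [
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
]
# Hand-picked filter that responds where brightness rises from left to right.
kernel = [[-1, 1], [-1, 1]]

features = flatten(max_pool(relu(conv2d(image, kernel))))
print(features)  # [2, 0, 2, 0]
```

The non-zero entries mark where the filter found the dark-to-bright edge. In a full network, this flattened list would be handed to a classifier layer.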

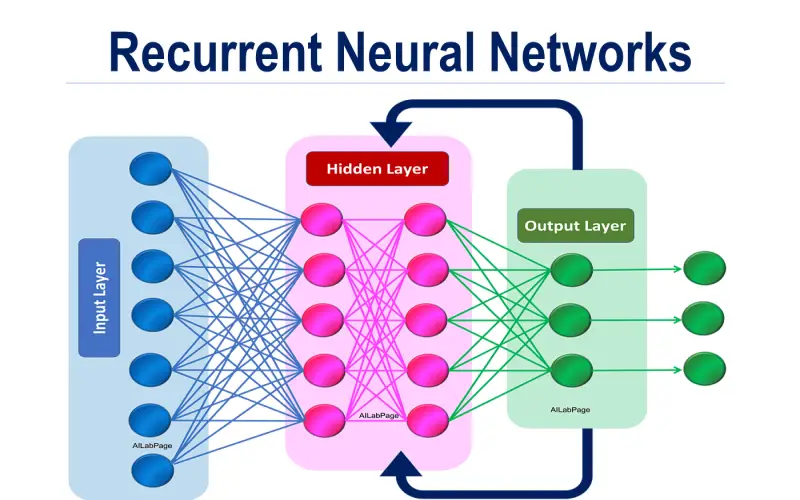

2. Recurrent Neural Networks (RNNs)

Recurrent Neural Networks (RNNs) are a particular type of artificial neural network. Their connections form a loop, enabling them to remember what they learned in the previous step. An RNN works by combining the current input with a stored summary of past inputs. Because the same weights are reused at every step, RNNs can handle inputs of varying length without the model getting bigger. They’re good at keeping track of what they’ve seen before, so they can process long sequences of data as a whole.
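
The loop can be sketched in a few lines of plain Python. This is a minimal illustration of a simple (Elman-style) recurrent step, not a trained model: the weight values `W_X`, `W_H`, and `B` are made-up assumptions, and the inputs are single numbers to keep the arithmetic readable.

```python
import math


def rnn_forward(inputs, w_x, w_h, b):
    """Run a simple RNN over a sequence of numbers; return every hidden state."""
    hidden_size = len(b)
    h = [0.0] * hidden_size  # the "memory" starts empty
    states = []
    for x in inputs:
        # New state mixes the current input with the previous state.
        h = [
            math.tanh(
                w_x[i] * x
                + sum(w_h[i][j] * h[j] for j in range(hidden_size))
                + b[i]
            )
            for i in range(hidden_size)
        ]
        states.append(h)
    return states


# Hypothetical weights, fixed by hand for illustration only.
W_X = [0.5, -0.3]
W_H = [[0.1, 0.2], [-0.2, 0.1]]
B = [0.0, 0.1]

# The same weights handle a short and a long sequence.
short = rnn_forward([0.5], W_X, W_H, B)
long = rnn_forward([1.0, 0.5, -0.2, 0.7], W_X, W_H, B)
print(len(short), len(long))
```

Note that the number of weights stays the same for both sequences; only the number of loop iterations changes.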

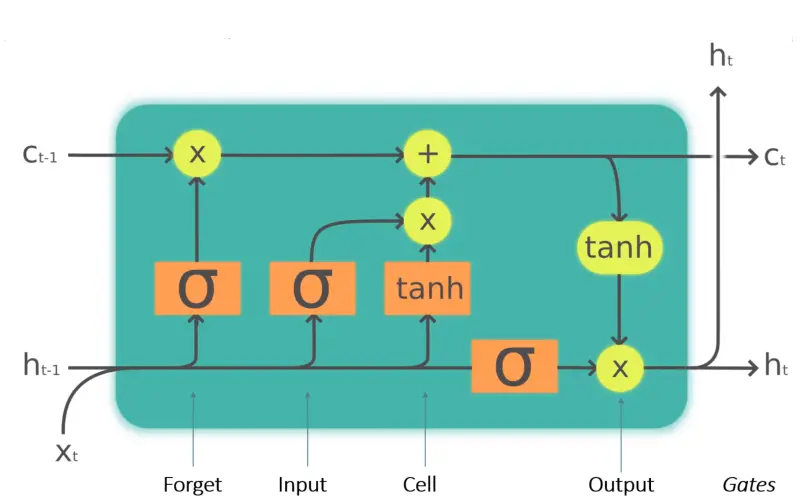

3. Long Short-Term Memory Networks (LSTMs)

Long Short-Term Memory networks (LSTMs) are a type of Recurrent Neural Network (RNN) that is good at learning and remembering information over long sequences. Like other RNNs, they are built to remember what happened in earlier steps, but they hold on to it for longer. LSTMs are helpful for predictions that unfold over time because they remember data they’ve seen before. An LSTM is structured like a chain in which each repeating cell contains four layers that interact with each other in a particular way. Besides predicting time-related information, LSTMs are also used for processing voice data, composing music, and even finding new medicines.
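
The four interacting layers are commonly described as a forget gate, an input gate, a candidate layer, and an output gate. The sketch below shows one step of such a cell with a single input number and a single hidden number. The parameter names and values in `PARAMS` are assumptions for illustration; real LSTMs use weight matrices learned from data.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(x, h_prev, c_prev, p):
    """One LSTM step for scalar input x, hidden state h_prev, cell state c_prev."""
    f = sigmoid(p["wf"] * x + p["uf"] * h_prev + p["bf"])    # forget gate
    i = sigmoid(p["wi"] * x + p["ui"] * h_prev + p["bi"])    # input gate
    g = math.tanh(p["wg"] * x + p["ug"] * h_prev + p["bg"])  # candidate values
    o = sigmoid(p["wo"] * x + p["uo"] * h_prev + p["bo"])    # output gate
    c = f * c_prev + i * g   # keep part of the old memory, add part of the new
    h = o * math.tanh(c)     # expose a filtered view of the memory
    return h, c


# Hypothetical parameters, chosen by hand for illustration only.
PARAMS = {
    "wf": 0.5, "uf": 0.1, "bf": 1.0,
    "wi": 0.4, "ui": -0.2, "bi": 0.0,
    "wg": 0.9, "ug": 0.3, "bg": 0.0,
    "wo": 0.6, "uo": 0.2, "bo": 0.0,
}

h, c = 0.0, 0.0
for x in [0.5, -1.0, 0.25]:
    h, c = lstm_step(x, h, c, PARAMS)
print(h, c)
```

The cell state `c` is the long-term memory: the forget gate decides how much of it survives each step, which is what lets information persist across many steps.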

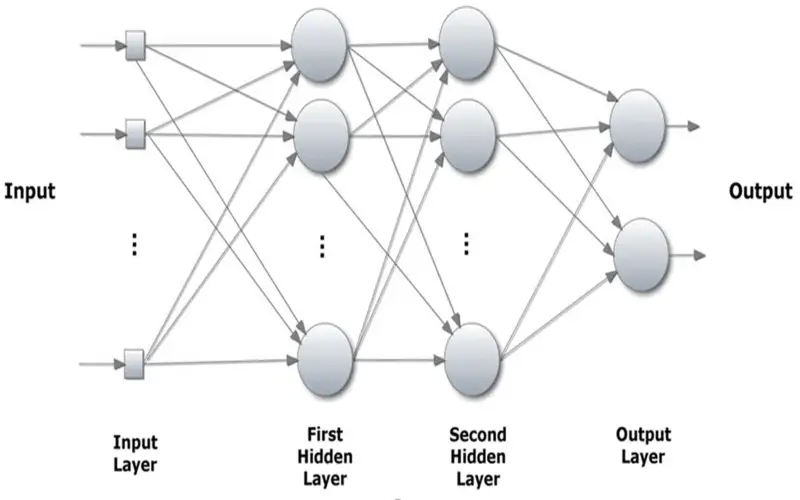

4. Multilayer Perceptrons (MLPs)

A Multilayer Perceptron (MLP) is a type of neural network made up of several layers of small units (perceptrons) that use activation functions. MLPs have an input layer for taking in data and an output layer for giving out processed data, with one or more hidden layers of interconnected neurons in between. Every neuron in one layer is connected to every neuron in the next. MLPs are good at recognizing speech and doing translations.
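
As a small illustration of the input, hidden, and output layers, here is a sketch of an MLP forward pass that computes XOR, a function a single-layer network cannot represent. The weights are set by hand rather than learned, and the names `W1`, `B1`, `W2`, and `B2` are assumptions made for this example.

```python
def dense(inputs, weights, biases):
    """Fully connected layer: each output is a weighted sum of all inputs."""
    return [
        sum(w * x for w, x in zip(row, inputs)) + b
        for row, b in zip(weights, biases)
    ]


def relu(values):
    """Activation function: negative values become zero."""
    return [max(0.0, v) for v in values]


# Hand-set weights (an assumption; a real MLP would learn these).
W1 = [[1.0, 1.0], [1.0, 1.0]]  # input layer -> 2 hidden neurons
B1 = [0.0, -1.0]
W2 = [[1.0, -2.0]]             # hidden layer -> 1 output neuron
B2 = [0.0]


def mlp_xor(x1, x2):
    hidden = relu(dense([x1, x2], W1, B1))
    return dense(hidden, W2, B2)[0]


for a, b in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(a, b, mlp_xor(a, b))
```

The hidden layer with its activation function is what makes this possible: without `relu`, the two layers would collapse into a single linear layer.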

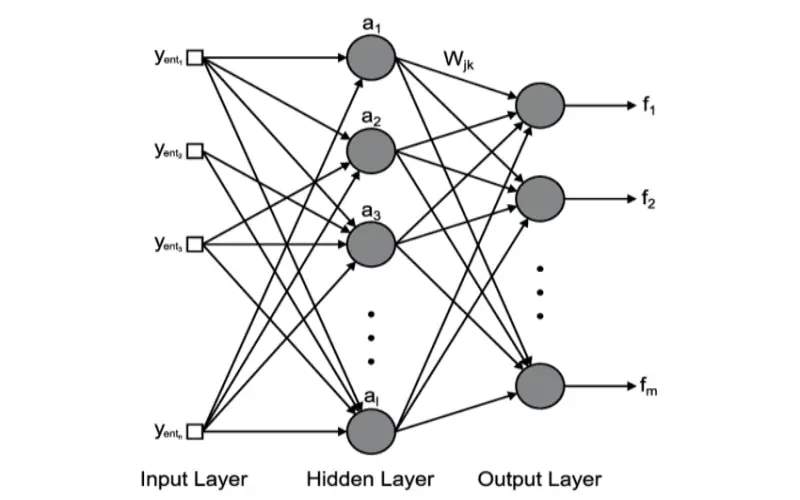

5. Radial Basis Function Networks (RBFNs)

Radial Basis Function Networks (RBFNs) are neural networks that use radial basis functions as activation functions. RBFNs consist of three main parts: the input layer, the hidden layer, and the output layer. They’re often used for classification (sorting data into groups), making predictions from given data, and understanding time-related data. One notable thing about RBFNs is that they typically have just one hidden layer, in which each neuron responds according to how close the input is to a stored center. The output layer then combines those responses as a weighted sum.
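
A minimal sketch of the three layers, assuming a Gaussian radial basis function and hand-picked centers and output weights (in practice both are fitted to data). The constants `CENTERS` and `WEIGHTS` and the helper `predict_xor` are hypothetical names used only for this example.

```python
import math


def gaussian_rbf(x, center, gamma):
    """Response is 1 at the center and fades with squared distance from it."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, center))
    return math.exp(-gamma * sq_dist)


def rbfn_output(x, centers, weights, gamma=1.0):
    """Hidden layer: one RBF per center. Output layer: weighted sum."""
    hidden = [gaussian_rbf(x, c, gamma) for c in centers]
    return sum(w * h for w, h in zip(weights, hidden))


# Hand-picked centers and output weights (assumptions for illustration).
CENTERS = [(0, 0), (0, 1), (1, 0), (1, 1)]
WEIGHTS = [-1.0, 1.0, 1.0, -1.0]


def predict_xor(x):
    """Classify by the sign of the network output."""
    return 1 if rbfn_output(x, CENTERS, WEIGHTS) > 0 else 0


print([predict_xor(p) for p in CENTERS])  # [0, 1, 1, 0]
```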

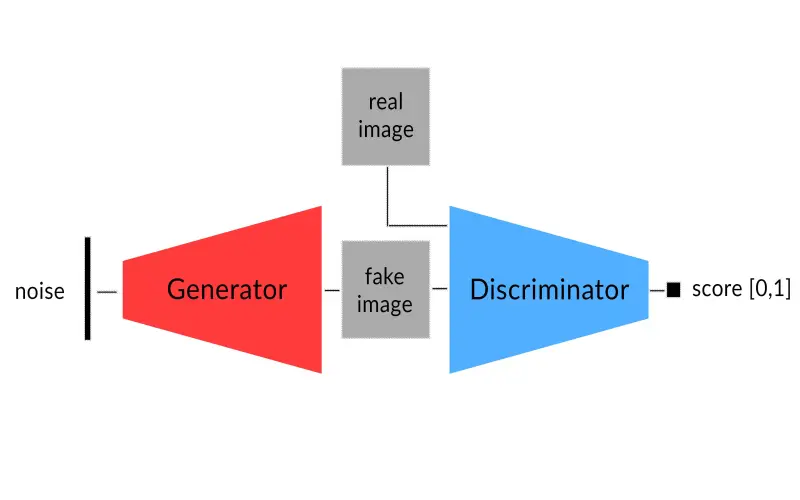

6. Generative Adversarial Networks (GANs)

A GAN, or Generative Adversarial Network, combines two neural networks: a Generator and a Discriminator. The Discriminator tries to tell real data from counterfeit data, and the Generator tries to create fake data good enough to fool it. The Generator keeps creating counterfeit data that looks real and the Discriminator keeps spotting the difference; repeating this process makes the Generator good at producing convincing counterfeit images. You can use these generated images to build a picture collection instead of using real photos. For images, the Generator is often built as a deconvolutional neural network. GANs are good at creating images and text and enhancing pictures, and they can also be used to find new medicines. They’re like a creative and intelligent duel between two networks that makes things look real.
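
The duel can be expressed as two loss values, one for each network. The sketch below computes them for a single batch using deliberately tiny stand-ins: a one-number linear Generator and a one-number logistic Discriminator. These stand-ins, the parameter values, and the commonly used non-saturating generator loss are assumptions for illustration; no training loop is shown.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def generator(z, scale, shift):
    """Toy generator: turns a noise value into a fake sample."""
    return scale * z + shift


def discriminator(x, weight, bias):
    """Toy discriminator: estimated probability that x is real."""
    return sigmoid(weight * x + bias)


def gan_losses(real_batch, noise_batch, gen_params, disc_params):
    fake_batch = [generator(z, *gen_params) for z in noise_batch]
    d_real = [discriminator(x, *disc_params) for x in real_batch]
    d_fake = [discriminator(x, *disc_params) for x in fake_batch]
    # Discriminator wants d_real near 1 and d_fake near 0.
    d_loss = -(
        sum(math.log(p) for p in d_real) / len(d_real)
        + sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    )
    # Generator wants the discriminator to call its fakes real.
    g_loss = -sum(math.log(p) for p in d_fake) / len(d_fake)
    return d_loss, g_loss


rng = random.Random(0)
real = [rng.gauss(4.0, 0.5) for _ in range(8)]   # hypothetical "real" data
noise = [rng.gauss(0.0, 1.0) for _ in range(8)]  # random input for the generator

d_loss, g_loss = gan_losses(real, noise, (1.0, 0.0), (1.0, -2.0))
print(d_loss, g_loss)
```

Training would alternate between nudging the Discriminator's parameters to lower `d_loss` and nudging the Generator's parameters to lower `g_loss`.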

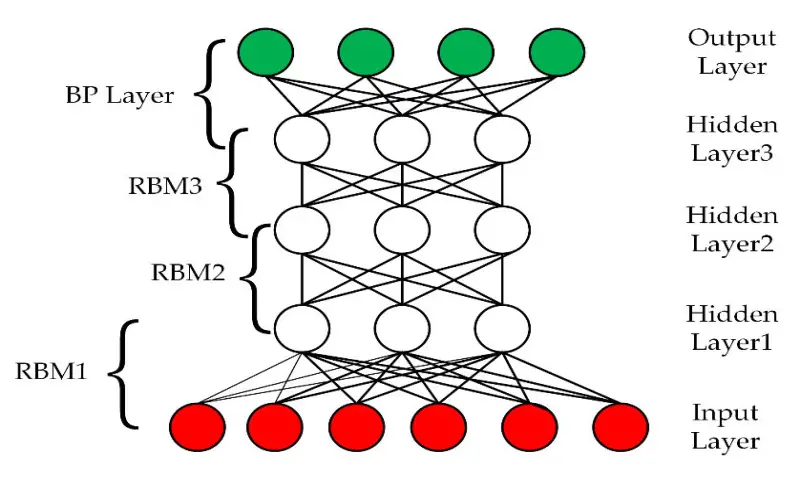

7. Deep Belief Networks (DBNs)

Deep Belief Networks (DBNs) are generative models with several layers of hidden, random (stochastic) variables. These variables act like hidden switches: each one is either on or off at any given time. The top two layers of a DBN have undirected, symmetric connections between them, forming an associative memory. A DBN is built as a stack of Restricted Boltzmann Machines, with connections between the layers but not between units in the same layer. DBNs are used for understanding images, videos, and data that contains motion.

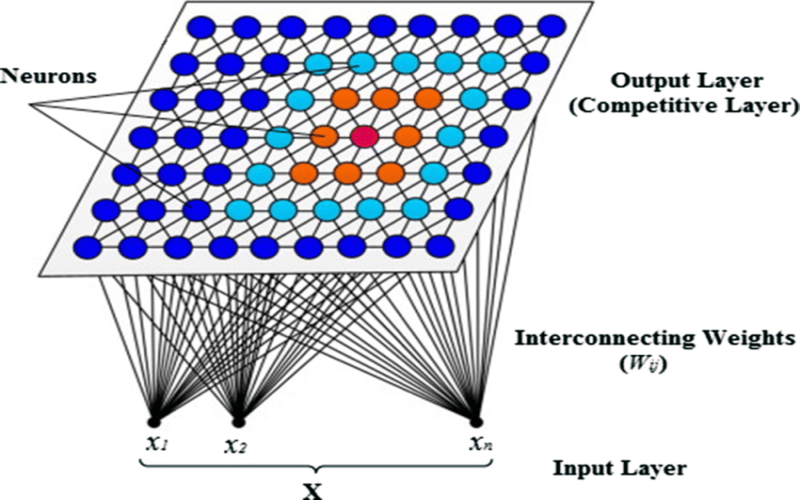

8. Self-Organizing Maps (SOMs)

Self-Organizing Maps (SOMs) are a technique that reduces the number of dimensions in data using unsupervised learning. They build a two-dimensional grid of nodes, where each node holds a set of weights linking it to the input. During training, the node closest to each input, together with its neighbors on the grid, has its weights adjusted to fit that input better. SOMs are great for exploring data or getting creative with music, video, and text using AI. They’re like an intelligent map that helps you find patterns in your data.
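
Here is a minimal sketch of that weight-adjustment idea on a small grid. The grid size, learning rate, neighborhood width, decay schedule, and sample data are all assumptions picked for illustration, and the function names are hypothetical.

```python
import math
import random


def best_matching_unit(grid, x):
    """Return (row, col) of the node whose weights are closest to x."""
    def sq_dist(rc):
        w = grid[rc[0]][rc[1]]
        return sum((wk - xk) ** 2 for wk, xk in zip(w, x))

    cells = [(r, c) for r in range(len(grid)) for c in range(len(grid[0]))]
    return min(cells, key=sq_dist)


def update(grid, x, lr, sigma):
    """Pull the best matching node and its grid neighbors toward x (in place)."""
    br, bc = best_matching_unit(grid, x)
    for r, row in enumerate(grid):
        for c, w in enumerate(row):
            grid_dist_sq = (r - br) ** 2 + (c - bc) ** 2
            # Neighbors farther away on the grid are pulled less.
            influence = math.exp(-grid_dist_sq / (2 * sigma ** 2))
            for k in range(len(w)):
                w[k] += lr * influence * (x[k] - w[k])


def train_som(data, rows=3, cols=3, epochs=20, lr=0.5, sigma=1.0, seed=0):
    rng = random.Random(seed)
    dim = len(data[0])
    grid = [[[rng.random() for _ in range(dim)] for _ in range(cols)]
            for _ in range(rows)]
    for epoch in range(epochs):
        decay = 1.0 - epoch / epochs  # shrink the step and neighborhood over time
        for x in data:
            update(grid, x, lr * decay, max(sigma * decay, 0.1))
    return grid


# Hypothetical data: two loose clusters in the unit square.
data = [[0.1, 0.1], [0.2, 0.1], [0.9, 0.9], [0.8, 0.9]]
som = train_som(data)
print(best_matching_unit(som, [0.1, 0.1]), best_matching_unit(som, [0.9, 0.9]))
```

After training, inputs that are similar tend to land on nearby nodes, which is what makes the grid usable as a map of the data.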

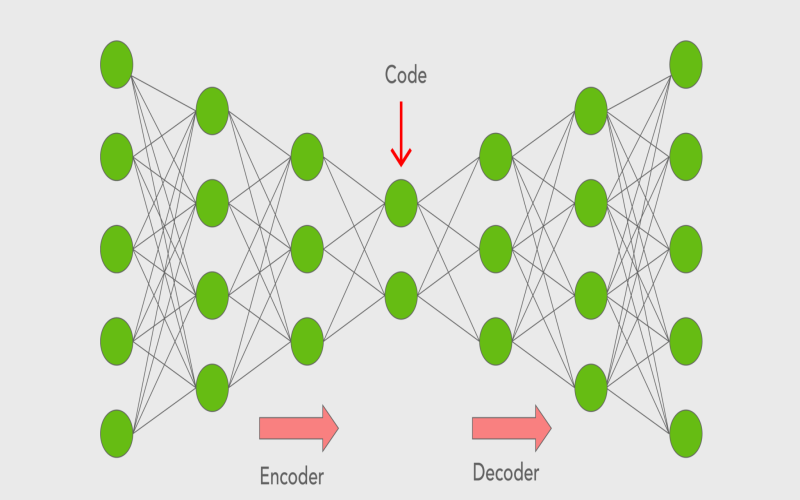

9. Autoencoders

Autoencoders are one of the most popular deep learning techniques, and they learn without labels: the network is trained to reproduce its own input. They first take the input data and encode it into a smaller representation, then use a decoding function to produce an output that rebuilds the input. This process helps us reduce data with many features to a compact, structured form. Autoencoders are generally used for recognizing important features and objects in images, building reliable recommendation systems, and improving big datasets by structuring them properly.
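
The following is a minimal sketch of the encode-then-decode idea: a linear autoencoder that squeezes two numbers into one and rebuilds them, trained with plain gradient descent. The data, starting weights, learning rate, and epoch count are assumptions for illustration, and the code is hard-wired to two input features to stay short.

```python
def encode(x, w_enc):
    """Compress the input into a single code value."""
    return sum(w * xi for w, xi in zip(w_enc, x))


def decode(code, w_dec):
    """Rebuild an approximation of the input from the code."""
    return [w * code for w in w_dec]


def mean_loss(data, w_enc, w_dec):
    """Average squared reconstruction error over the dataset."""
    total = 0.0
    for x in data:
        recon = decode(encode(x, w_enc), w_dec)
        total += sum((r - xi) ** 2 for r, xi in zip(recon, x))
    return total / len(data)


def train(data, epochs=500, lr=0.05):
    w_enc = [0.1, 0.1]  # hypothetical starting weights
    w_dec = [0.1, 0.1]
    n = len(data)
    for _ in range(epochs):
        g_enc = [0.0, 0.0]
        g_dec = [0.0, 0.0]
        for x in data:
            code = encode(x, w_enc)
            err = [r - xi for r, xi in zip(decode(code, w_dec), x)]
            # Gradient of the squared error with respect to the code value.
            d_code = sum(2 * e * w for e, w in zip(err, w_dec))
            for k in range(2):
                g_dec[k] += 2 * err[k] * code / n
                g_enc[k] += d_code * x[k] / n
        w_enc = [w - lr * g for w, g in zip(w_enc, g_enc)]
        w_dec = [w - lr * g for w, g in zip(w_dec, g_dec)]
    return w_enc, w_dec


# Hypothetical data: the second feature is always twice the first,
# so one number is enough to describe each point.
DATA = [[x, 2 * x] for x in (-1.0, -0.5, 0.0, 0.5, 1.0)]

initial_loss = mean_loss(DATA, [0.1, 0.1], [0.1, 0.1])
w_enc, w_dec = train(DATA)
final_loss = mean_loss(DATA, w_enc, w_dec)
print(initial_loss, final_loss)
```

The single code value is the compact representation; the drop in reconstruction error shows how much of the input it manages to preserve.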

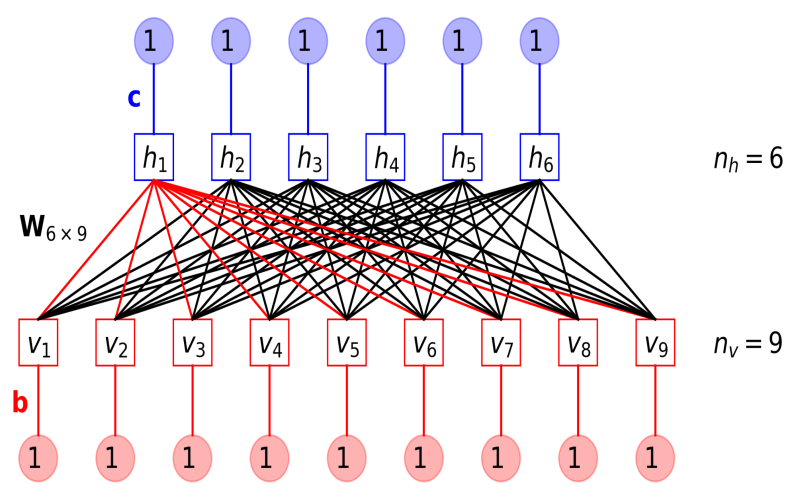

10. Restricted Boltzmann Machines (RBMs)

Restricted Boltzmann Machines (RBMs) are networks made of two layers of nodes, a visible layer and a hidden layer, joined by connections that have no set direction. Training adjusts the model parameters: the weights on those connections and a bias for each node. Unlike the network models discussed above, Boltzmann Machines are stochastic, meaning they are a bit random. They work in two phases: a forward pass and a backward pass. In the forward pass, they take the inputs and turn them into a set of encoded numbers. In the backward pass, those numbers are decoded back into a reconstruction of the original inputs. The reconstruction, produced using the weights and the visible-layer biases, is then compared with the initial input to see how well the model has learned.
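
The forward and backward passes can be sketched as follows. This is an illustration of a single reconstruction with hand-set parameters, not a training procedure: the weight and bias values, the three-visible/two-hidden layout, and the function names are all assumptions.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(visible, weights, hidden_bias):
    """Forward pass: probability that each hidden unit switches on."""
    return [
        sigmoid(sum(v * weights[i][j] for i, v in enumerate(visible))
                + hidden_bias[j])
        for j in range(len(hidden_bias))
    ]


def backward(hidden, weights, visible_bias):
    """Backward pass: rebuild the visible layer using the same weights."""
    return [
        sigmoid(sum(h * weights[i][j] for j, h in enumerate(hidden))
                + visible_bias[i])
        for i in range(len(visible_bias))
    ]


def sample(probs, rng):
    """Randomly switch each unit on according to its probability."""
    return [1 if rng.random() < p else 0 for p in probs]


def reconstruct(visible, weights, visible_bias, hidden_bias, rng):
    hidden = sample(forward(visible, weights, hidden_bias), rng)
    recon = backward(hidden, weights, visible_bias)
    error = sum((v - r) ** 2 for v, r in zip(visible, recon)) / len(visible)
    return recon, error


# Hypothetical parameters: 3 visible units, 2 hidden units.
WEIGHTS = [[2.0, -1.0], [-1.5, 1.0], [2.0, -1.0]]  # WEIGHTS[visible][hidden]
VISIBLE_BIAS = [0.0, 0.0, 0.0]
HIDDEN_BIAS = [-1.0, 0.0]

recon, error = reconstruct([1, 0, 1], WEIGHTS, VISIBLE_BIAS, HIDDEN_BIAS,
                           random.Random(0))
print(recon, error)
```

A training algorithm would use the gap between the input and its reconstruction to adjust the weights and biases; that step is left out here.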