For my computer science project I am studying linear regression, and one of the concepts in that topic is calculating the sampling error. Please help me understand how to calculate it.

Process Required In Calculating Sampling Error In Linear Regression

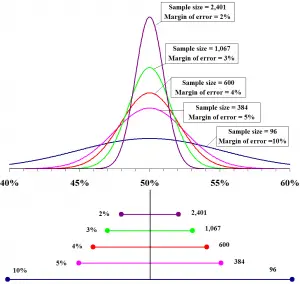

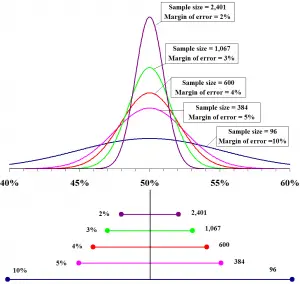

Calculating the sampling error during linear regression analysis is straightforward. You need to know the sample data set and the confidence level (as a percentage) you want for the margin of error. Here are the steps to calculate the sampling error.

- Find the size of the sample data set (n) and the proportion level (x), expressed as a decimal between 0 and 1.

- Multiply the proportion level (x) by (1 - x).

- Divide the result by n.

- Take the square root of the answer. This is called the standard error.

- Multiply the standard error by the standard value (z*) that corresponds to your confidence level, for example about 1.96 for 95% confidence.

- The result is the sampling error.
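The steps above amount to the formula z* × sqrt(x(1 - x) / n). Here is a minimal Python sketch of that calculation. The function name `sampling_error` and the default `z_star=1.96` (roughly a 95% confidence level) are my own choices for illustration, not part of any library.

```python
import math


def sampling_error(x: float, n: int, z_star: float = 1.96) -> float:
    """Sampling error for a proportion x observed in a sample of size n.

    z_star is the standard value for the chosen confidence level;
    1.96 (about 95% confidence) is an assumed default.
    """
    if not 0.0 <= x <= 1.0:
        raise ValueError("x must be a proportion between 0 and 1")
    if n <= 0:
        raise ValueError("n must be a positive sample size")

    # Steps 2-4: x * (1 - x), divided by n, then the square root
    standard_error = math.sqrt(x * (1.0 - x) / n)

    # Step 5: scale the standard error by the standard value z*
    return z_star * standard_error


# Example: a proportion of 0.5 in a sample of 100 at about 95% confidence
print(round(sampling_error(0.5, 100), 3))  # prints 0.098
```

So with n = 100 and x = 0.5, the sampling error is about 0.098, or roughly plus or minus 9.8 percentage points. A larger sample shrinks the error, because n sits in the denominator under the square root.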


By definition, "linear regression" is a type of statistical analysis that tries to show a relationship between two variables. It looks at the data points and fits a trend line through them. It can be used to build a predictive model from seemingly random data and to show trends in data such as cancer diagnoses or stock prices. This makes it a vital tool in analytics.

The method uses statistical computations to plot a trend line through a set of data points. The data could be anything from the number of people diagnosed with skin cancer to the financial performance of a particular company. The trend line shows the relationship between an independent variable and the dependent variable being studied. The computations needed to perform a linear regression can be fairly complex.

Luckily, linear regression is built into most major computational packages, such as Microsoft Excel, Mathematica, R, and MATLAB.
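If you want to see what those packages do under the hood for the simplest case (one independent variable), here is a rough Python sketch of an ordinary least-squares fit. Ordinary least squares is the usual way to fit the trend line, though the text above does not name a specific method, so treat that as my assumption. The helper name `fit_line` and the sample data are made up for illustration.

```python
def fit_line(xs, ys):
    """Fit y = slope * x + intercept by ordinary least squares.

    xs holds the independent variable, ys the dependent variable.
    """
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two equal-length lists with at least 2 points")

    mean_x = sum(xs) / n
    mean_y = sum(ys) / n

    # Spread of x around its mean, and how x and y vary together
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    if sxx == 0:
        raise ValueError("all x values are identical; the slope is undefined")

    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Made-up data that lies exactly on the line y = 2x + 1
slope, intercept = fit_line([1, 2, 3, 4], [3, 5, 7, 9])
print(slope, intercept)  # prints 2.0 1.0
```

Real data will not sit exactly on a line, so the fitted slope and intercept only describe the overall trend.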